Aerospace Human Factors and AI [part 5/6]

Understanding EASA’s Building Block #3: A Deep Dive into Regulatory Compliance in Aviation

To unveil the relationship between Human Factors and AI, I continue my journey by introducing Block #3 of the "EASA Concept Paper: Guidance for Level 1 & 2 Machine Learning Applications", which refers to the Human Factors of AI.

But how will AI requirements be incorporated into Aviation Human Factors programmes?

The EASA concept paper provides initial Human Factors guidance for designing AI systems, with a strong focus on AI Explainability and Human-AI Teaming.

This is the fifth article in the six-part series about Human Factors (HF) and AI, focusing particularly on understanding EASA's Building Block #3:

Part 1 - Introduction to Aviation Human Factors (HF)

Part 2 - The Relationship Between Human-Centred AI (HCAI) and Aviation Human Factors

Part 3 - AI's Positive Impact on Aviation Human Factors

Part 4 - Emerging Human Factors Challenges Due to AI Adoption in Aviation

Part 5 - Understanding EASA's Building Block #3: A Deep Dive into Regulatory Compliance

Part 6 - Future Directions and Integration Strategies for HF and AI in Aviation

Hence, today’s article will focus on:

AI Explainability requirements

Human-AI Teaming

Conclusions

Let’s dive in! 🤿

AI Explainability requirements

As I introduced in part 4 of this series, “Aerospace Human Factors and AI [part 4/6]”, there are potential Human Factors issues that could be introduced by the adoption of AI.

I split them into four layers: Organisation; Supervision; Immediate Environment; Individual.

Regarding the Immediate Environment, I identified the following six contributing factors to Human Error:

AI System Design and Interface: Poorly designed interfaces and unclear system feedback can cause user confusion, increasing the likelihood of operational errors.

AI System Reliability and Trust: Inconsistent or unreliable AI recommendations can erode trust in the system, causing engineers to disregard valuable AI insights or, conversely, to rely too heavily on flawed outputs.

Complex Decision-Making: AI can handle more complex data, which might be beyond users’ comprehension, leading to judgement or execution errors.

Data Bias and Misinterpretation: AI systems can perpetuate biases from their training data, resulting in poor decisions.

Unexpected Failure Modes: Broadening AI’s remit increases the chance of unforeseen failures, for which users might not be prepared.

Transparency: As AI becomes more complex, it operates more like a "black box," hindering user trust and verification, leading to errors.

In essence, these factors pertain to AI Explainability.

AI Explainability involves providing end users with understandable information on how the AI system arrived at its results.

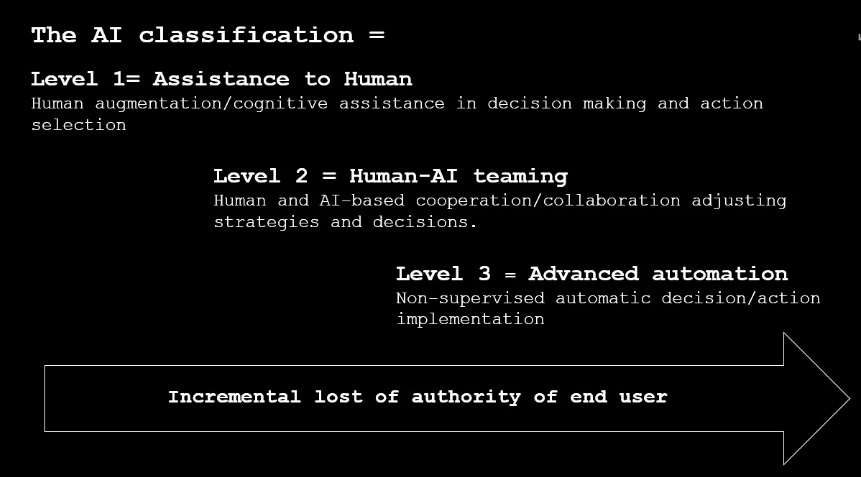

The principle is that, as end-user authority diminishes, EASA introduces additional objectives and adaptations to account for specific end-user needs linked to the introduction of AI-based systems.

AI Explainability remains paramount.

Hence, new design principles and goals are introduced as the AI level increases, based on the evolving interaction between the end-user and the AI-based system.

Trust in the AI-based system is also vital.

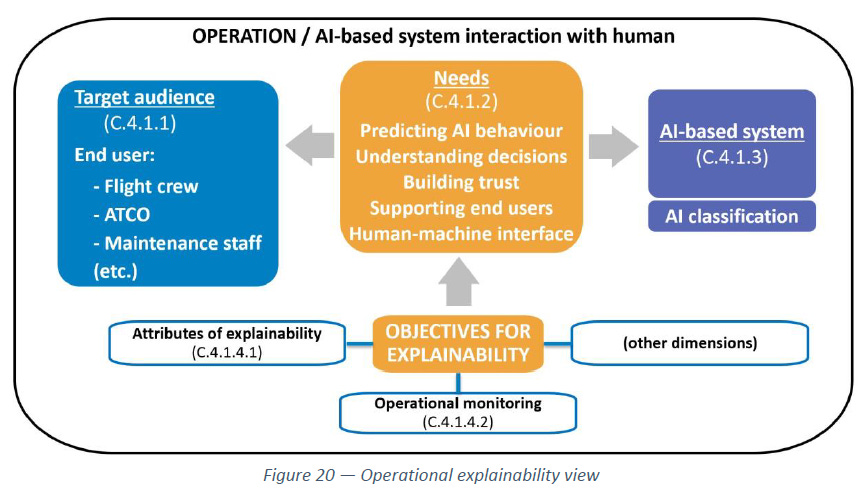

Target audiences for AI Explainability include but are not limited to:

Flight Crew

Air Traffic Control Officers (ATCO) in the ATM domain

Maintenance engineers in the Maintenance domain

Hence, to address Human Factors in AI, the EASA concept paper develops objectives in the following areas:

AI Operational Explainability

Monitoring of the Operational Design Domain (ODD) and of the output confidence in operations
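To make the idea of monitoring the ODD and the output confidence more concrete, here is a minimal Python sketch. It is only an illustration under my own assumptions: the names `OddLimit` and `monitor_output`, the parameter limits, and the 0.8 confidence threshold are hypothetical and are not taken from the EASA concept paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OddLimit:
    """Hypothetical closed interval that one input parameter must stay within."""
    low: float
    high: float


def monitor_output(inputs, odd_limits, confidence, min_confidence=0.8):
    """Return human-readable alerts for the end user; an empty list means no alert.

    min_confidence is an assumed threshold chosen for illustration only.
    """
    alerts = []
    # Check every monitored parameter against its ODD limits.
    for name, limit in odd_limits.items():
        value = inputs.get(name)
        if value is None:
            alerts.append(f"{name}: missing input, ODD cannot be verified")
        elif not limit.low <= value <= limit.high:
            alerts.append(f"{name}={value} outside ODD [{limit.low}, {limit.high}]")
    # Flag outputs whose reported confidence is too low to rely on.
    if confidence < min_confidence:
        alerts.append(f"output confidence {confidence:.2f} below {min_confidence:.2f}")
    return alerts


# Illustrative values only; not taken from the EASA concept paper.
odd = {"altitude_ft": OddLimit(0, 41000), "crosswind_kt": OddLimit(0, 25)}
print(monitor_output({"altitude_ft": 35000, "crosswind_kt": 32}, odd, confidence=0.65))
```

In this sketch, the alerts are plain sentences because the point is to tell the end user why the output should be treated with caution, not only that something is wrong.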

Human-AI Teaming

The concept of Human-AI Teaming emphasises the cooperative and collaborative interaction between end users and AI-based systems to achieve common goals.

Depending on the maturity level of the AI system, this interaction can involve a shared understanding of goals, roles, and decision-making processes.

Effective Human-AI Teaming requires developing trust and effective communication.

With the evolution towards Level 2 AI applications, guidance is necessary for effectively implementing Human-AI Teaming (HAT).

The guidance distinguishes between cooperation and collaboration to clarify the AI levels and to provide new modes of operation.

For EASA, the differences between the two are:

Human-AI Cooperation (Level 2A)

Role: AI assists the user in achieving their goal.

Task Allocation: Predefined tasks with informative feedback.

Approach: Directive, AI guides the user.

Situation Awareness: No shared awareness needed.

Human-AI Collaboration (Level 2B)

Role: User and AI work together to achieve a shared goal.

Task Allocation: Real-time adjustments based on shared situation awareness.

Approach: Co-constructive, requiring joint efforts.

Situation Awareness: Shared awareness required.
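The comparison above can also be written down as a small lookup structure, which may help when a design team needs to record which teaming mode a given function targets. This is a minimal Python sketch; the names `AILevel`, `TeamingMode`, `TEAMING_MODES`, and `requires_shared_awareness` are my own hypothetical choices, and the field values simply restate the summary above.

```python
from dataclasses import dataclass
from enum import Enum


class AILevel(Enum):
    LEVEL_2A = "2A"  # Human-AI cooperation
    LEVEL_2B = "2B"  # Human-AI collaboration


@dataclass(frozen=True)
class TeamingMode:
    """One row of the cooperation vs collaboration comparison."""
    name: str
    role: str
    task_allocation: str
    approach: str
    shared_situation_awareness: bool


# Values restate the article's summary; the structure itself is an assumption.
TEAMING_MODES = {
    AILevel.LEVEL_2A: TeamingMode(
        name="Human-AI Cooperation",
        role="AI assists the user in achieving their goal",
        task_allocation="Predefined tasks with informative feedback",
        approach="Directive",
        shared_situation_awareness=False,
    ),
    AILevel.LEVEL_2B: TeamingMode(
        name="Human-AI Collaboration",
        role="User and AI work together to achieve a shared goal",
        task_allocation="Real-time adjustments",
        approach="Co-constructive",
        shared_situation_awareness=True,
    ),
}


def requires_shared_awareness(level):
    """Tell whether the given AI level needs shared situation awareness."""
    return TEAMING_MODES[level].shared_situation_awareness


for level, mode in TEAMING_MODES.items():
    print(level.value, mode.name, requires_shared_awareness(level))
```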

Conclusions

As introduced in “Aerospace Human Factors and AI [part 1/6]”, there are different groups of human errors: slips and lapses; mistakes; violations.

EASA emphasises that AI must be designed to tolerate human error. However, as I have presented, the integration of AI-based systems introduces new error types.

EASA's concept paper emphasises AI Explainability and Human-AI Teaming to ensure safety and trust:

AI Explainability is crucial. Complex AI systems can erode trust, cause operational errors, and overwhelm users with data. EASA advocates for clear explanations of AI outputs, awareness of system limitations, and communication of output reliability to build trust and effectiveness.

Human-AI Teaming enhances collaboration. EASA distinguishes between AI assisting users with predefined tasks (Level 2A) and AI working with users towards shared goals (Level 2B). Success depends on developing trust and maintaining clear communication.

Future directions include enhancing training, fostering feedback loops, updating regulations, focusing on user-centred design, and continuing research.

The next and final article in this series will focus on future directions and integration strategies for HF and AI in aviation.

Stay tuned for an in-depth discussion of how to merge Human Factors with AI as the aerospace landscape evolves.

This is all for today.

See you next week 👋

Disclaimer: The information provided in this newsletter and related resources is intended for informational and educational purposes only. It reflects both researched facts and my personal views. It does not constitute professional advice. Any actions taken based on the content of this newsletter are at the reader's discretion.