Aerospace Human Factors and AI [part 4/6]

Emerging Human Factors Challenges Due to AI Adoption in Aviation.

AI won’t be different.

As with any emerging technology, AI brings advancements as well as new risks and challenges. In aerospace, where a risk-based approach is followed, human factors must always be considered as they can impact human performance.

This is why it is so important to design AI-based systems and tools around humans, which leads to the concept of Human-Centred AI (HCAI). Can you see how everything connects now?

In the article "Aerospace Human Factors and AI [Part 1/6]", I introduced the concept of human factors that can affect human performance, using the MEDA model in the maintenance domain as an example and highlighting its synergies with HCAI.

This is the fourth article in the six-part series about Human Factors (HF) and AI, focusing particularly on emerging human factors challenges due to AI adoption in aviation.

Part 1 - Introduction to Aviation Human Factors (HF)

Part 2 - The Relationship Between Human-Centred AI (HCAI) and Aviation Human Factors

Part 3 - AI's Positive Impact on Aviation Human Factors

Part 4 - Emerging Human Factors Challenges Due to AI Adoption in Aviation

Part 5 - Understanding EASA's Building Block #3: A Deep Dive into Regulatory Compliance

Part 6 - Future Directions and Integration Strategies for HF and AI in Aviation

Today’s focus is:

Emerging Human Factors due to the adoption of AI systems

Conclusions

Let’s dive in! 🤿

Emerging Human Factors due to the adoption of AI systems

Which new human factors could the adoption of AI introduce?

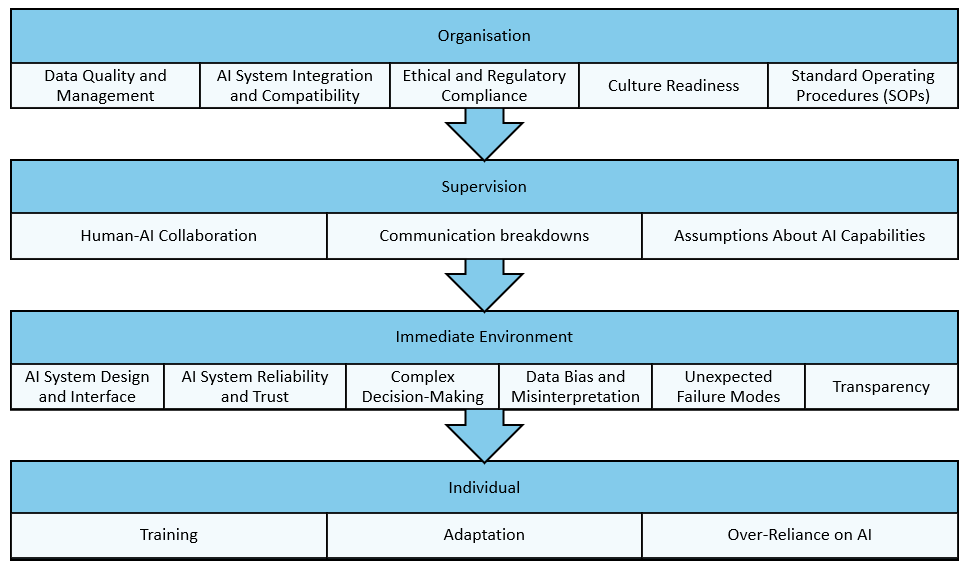

I have identified an initial set of potential Contributing Factors that could be added to the MEDA model presented in Part 1, grouped into the following categories:

Individual Factors

Training: Insufficient training on AI's capabilities and limitations can lead to misuse or misunderstanding of AI tools, resulting in errors and inefficiencies.

Adaptation: Engineers may struggle to adapt to AI technology, leading to resistance to its use or improper implementation, which can compromise safety and performance.

Over-Reliance on AI: Users may depend too much on AI without understanding its limitations, increasing the risk of error when the AI fails.

Immediate Environment

AI System Design and Interface: Poorly designed interfaces and unclear system feedback can cause user confusion, increasing the likelihood of operational errors.

AI System Reliability and Trust: Inconsistent or unreliable AI recommendations can erode trust in the system, causing engineers either to disregard valuable AI insights or to rely too heavily on flawed outputs.

Complex Decision-Making: AI can process data that is more complex than users can readily comprehend, which can lead to errors of judgment or execution.

Data Bias and Misinterpretation: AI systems can perpetuate biases from their training data, resulting in poor decisions.

Unexpected Failure Modes: Broadening AI’s remit increases the chance of unforeseen failures, for which users might not be prepared.

Transparency: As AI becomes more complex, it operates more like a "black box", which undermines user trust, makes its outputs harder to verify, and can lead to errors.

Supervision

Human-AI Collaboration: AI integration may disrupt team dynamics, leading to role confusion.

Communication Breakdowns: Effective communication is crucial in any team setting, and the introduction of AI can disrupt established communication patterns.

Assumptions About AI Capabilities: Team members might overestimate the capabilities of AI, assuming it can handle tasks it wasn't designed for. This can lead to important tasks being overlooked or improperly executed.

Organisation

Data Quality and Management: Poor data quality or inadequate data security can lead to unreliable AI outputs and potential data breaches, jeopardising both operational integrity and confidentiality.

AI System Integration and Compatibility: Inadequate integration of AI systems with existing tools can cause workflow disruptions and operational inefficiencies. Incompatibility issues may prevent effective use of AI technologies.

Ethical and Regulatory Compliance: Failure to adhere to ethical guidelines and aviation regulations can result in legal penalties, reputational damage, and compromised safety standards.

Culture Readiness: A lack of organisational readiness to adopt AI can hinder successful implementation, leading to resistance from staff and a failure to realise AI's potential benefits.

Standard Operating Procedures (SOPs): Outdated or unclear SOPs can cause confusion and inconsistencies in AI usage, leading to potential safety risks and operational errors.
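As a rough illustration, the categories and factors listed above could be captured in a simple lookup structure, for example to tag the findings of an event review. This is only a sketch under my own assumptions: the dictionary layout and the helper names `category_of` and `tally_by_category` are hypothetical and are not part of the MEDA model itself.

```python
from collections import Counter

# Hypothetical encoding of the factors listed in this article.
# The category and factor names come from the text; the structure is an assumption.
AI_CONTRIBUTING_FACTORS = {
    "Individual Factors": [
        "Training",
        "Adaptation",
        "Over-Reliance on AI",
    ],
    "Immediate Environment": [
        "AI System Design and Interface",
        "AI System Reliability and Trust",
        "Complex Decision-Making",
        "Data Bias and Misinterpretation",
        "Unexpected Failure Modes",
        "Transparency",
    ],
    "Supervision": [
        "Human-AI Collaboration",
        "Communication Breakdowns",
        "Assumptions About AI Capabilities",
    ],
    "Organisation": [
        "Data Quality and Management",
        "AI System Integration and Compatibility",
        "Ethical and Regulatory Compliance",
        "Culture Readiness",
        "Standard Operating Procedures (SOPs)",
    ],
}


def category_of(factor):
    """Return the category a factor belongs to, or None if it is not listed.

    Matching ignores letter case so small spelling variations still resolve.
    """
    wanted = factor.casefold()
    for category, factors in AI_CONTRIBUTING_FACTORS.items():
        if wanted in (name.casefold() for name in factors):
            return category
    return None


def tally_by_category(tagged_factors):
    """Count how many of the given factors fall into each category.

    Factors that are not in the list above are ignored.
    """
    categories = (category_of(factor) for factor in tagged_factors)
    return Counter(category for category in categories if category is not None)


# Example: factors tagged during a (made-up) event review.
event_factors = ["Training", "Transparency", "Over-Reliance on AI"]
print(tally_by_category(event_factors))
```

In this made-up example, two of the tagged factors fall under Individual Factors and one under Immediate Environment, which hints at where mitigation effort might be directed first.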

Conclusions

Human factors play a critical role in the success and safety of AI integration in the aerospace sector.

At the same time, adopting AI-based tools or systems can introduce new human factors, and with them new risks.

The contributing factors identified here span the individual, the immediate environment, supervision and the organisation, so addressing them requires a multifaceted approach to achieve effective and safe use of AI technologies.

The next article continues this series with a focus on EASA's Building Block #3 and a deep dive into regulatory compliance, providing more detailed insight into the structured framework needed to integrate AI into aviation.

Stay tuned for an in-depth discussion of how human factors and AI can be brought together as the aerospace landscape evolves.

This is all for today.

See you next week 👋

Disclaimer: The information provided in this newsletter and related resources is intended for informational and educational purposes only. It reflects both researched facts and my personal views. It does not constitute professional advice. Any actions taken based on the content of this newsletter are at the reader's discretion.