Assuring AI: Where Transparency Meets Human Understanding

EASA's AI Assurance framework ensures safe, transparent AI in aerospace, focusing on learning processes, MLOps, and explainability standards.

EASA has established guidelines within its AI Assurance framework, focusing on ensuring AI systems are developed with safety, transparency, and fairness at the forefront.

Specifically, Block #2 focuses on the learning, training, and output generation processes of AI systems.

This block examines the training data, algorithms, and methodologies used to ensure transparent and unbiased AI learning aligned with safety standards.

Furthermore, it underscores the need for AI systems to generate intelligible outputs for human operators, thus narrowing the divide between sophisticated models and human comprehension.

In today’s article I cover:

Introduction to AI Assurance in Aerospace

What is Learning Assurance?

The significance of MLOps and its importance

Definition of Development and Post-Ops Explainability

Conclusions

Let’s dive in! 🤿

Introduction to AI Assurance in Aerospace

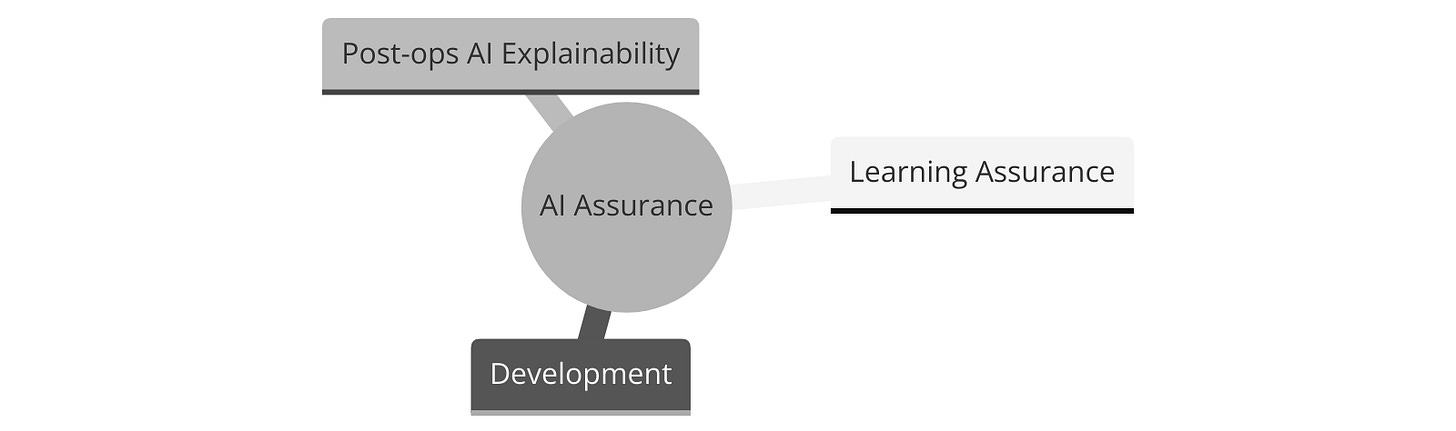

Taking the "EASA Concept Paper: guidance for Level 1 & 2 machine learning applications" as a reference, the main purposes of AI Assurance are:

combining system-centric guidance with an emphasis on human factors,

setting objectives for AI systems, acknowledging the specifics of ML techniques,

introducing Learning Assurance, focusing on data management and learning processes, including reuse of AI/ML models and surrogate modelling,

addressing transparency and explainability of ML models through Development and Post-Ops Explainability.

What is Learning Assurance?

In accordance with the EASA concept paper, Learning Assurance is:

“All of those planned and systematic actions used to substantiate, at an adequate level of confidence, that errors in a data-driven learning process have been identified and corrected such that the AI/ML constituent satisfies the applicable requirements at a specified level of performance, and provides sufficient generalisation and robustness capabilities.”

The essence is to assure that AI-based systems perform as anticipated, maintaining the expected performance level and ensuring model robustness.
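As a rough illustration of what "a specified level of performance" and "sufficient generalisation" could look like as checks, here is a minimal Python sketch. It is my own simplification, not a method taken from the EASA concept paper: the names `accuracy` and `check_performance`, the use of accuracy as the metric, and the numeric thresholds are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class PerformanceCheck:
    meets_requirement: bool    # held-out accuracy reaches the required level
    generalisation_gap: float  # training accuracy minus held-out accuracy
    gap_acceptable: bool       # gap stays within the allowed margin


def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of predictions that match the labels."""
    if len(predictions) != len(labels) or len(labels) == 0:
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def check_performance(train_acc: float, test_acc: float,
                      required_acc: float, max_gap: float) -> PerformanceCheck:
    """Compare held-out performance with a requirement and bound the train/test gap.

    required_acc and max_gap are hypothetical values that a project would
    have to derive from its own requirements.
    """
    gap = train_acc - test_acc
    return PerformanceCheck(
        meets_requirement=test_acc >= required_acc,
        generalisation_gap=gap,
        gap_acceptable=gap <= max_gap,
    )


# Usage with made-up numbers: the model fits the training data well
# but falls short on held-out data.
result = check_performance(train_acc=0.98, test_acc=0.75,
                           required_acc=0.90, max_gap=0.05)
print(result.meets_requirement, result.gap_acceptable)
```

A large gap between training and held-out performance is one simple signal that the model may not generalise. A real assurance case would rely on much broader evidence than a single metric.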

EASA outlines process steps and structures for achieving these objectives, highlighting the iterative nature of training and implementation, alongside Quality and Process Assurance to meet lifecycle process objectives.

Quality and Process Assurance is an integral process: it ensures that the life-cycle process objectives are met and that the activities have been completed as outlined in the plans, or that any deviations have been addressed.

The significance of MLOps and its importance

The word MLOps is a compound of “machine learning” and “DevOps”, the continuous development practice from the software field.

MLOps is a set of practices that aims to deploy and maintain machine learning models in production reliably and efficiently.

In the context of safety-related systems, MLOps is particularly important as it helps to ensure that the ML models continuously deliver accurate and reliable results.

Some key features of model monitoring are:

Explainable AI: It enables understanding of, and trust in, AI decisions.

Continuous evaluation: It streamlines and optimises model performance assessments.

Model drift: It detects a decline in performance due to data changes.

Model risk management: It addresses losses from underperforming models.
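To make the model drift item above more concrete, here is a minimal Python sketch of one way to flag a change in input data. It uses the Population Stability Index (PSI), which is my choice for illustration and is not prescribed by EASA or by the referenced Azure blog. The function names, the number of bins, and the 0.25 threshold (a commonly quoted rule of thumb) are assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(reference: Sequence[float],
                               live: Sequence[float],
                               bins: int = 10) -> float:
    """PSI between a reference sample (e.g. training data) and a live sample.

    Bin edges are derived from the reference sample; live values outside
    that range are counted in the first or last bin.
    """
    if not reference or not live:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has no spread")
    width = (hi - lo) / bins

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p = proportions(reference)
    live_p = proportions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))


def drift_detected(reference: Sequence[float], live: Sequence[float],
                   threshold: float = 0.25) -> bool:
    """Flag drift when PSI exceeds the (assumed) threshold."""
    return population_stability_index(reference, live) > threshold


# Usage with synthetic data: the live feature values have shifted upwards.
reference_sample = [i / 100 for i in range(100)]
live_sample = [0.5 + i / 200 for i in range(100)]
print(drift_detected(reference_sample, reference_sample))
print(drift_detected(reference_sample, live_sample))
```

A check like this only looks at one input feature at a time. In practice it would sit alongside monitoring of the model's actual performance.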

Definition of Development and Post-Ops Explainability

The drive for Development and Post-Operations AI explainability stems from the needs of stakeholders in both the development cycle and post-operational phase. EASA’s concept paper illustrates this necessity, highlighting the importance of making AI's decision-making processes understandable and transparent for all stakeholders involved.

Conclusions

EASA's AI Assurance framework represents a significant stride towards integrating AI into aerospace with a focus on safety, reliability, and transparency.

As the aerospace sector continues to embrace AI, guidelines like these ensure that technological advancements enhance safety standards rather than compromise them, fostering a future where AI and human capabilities are seamlessly integrated in support of aerospace safety and efficiency.

References

EASA: EASA Concept Paper: Guidance for Level 1 & 2 machine learning applications. March 2024.

Microsoft Azure: MLOps Blog Series Part 1: The art of testing machine learning systems using MLOps. Accessed March 2024.

This is all for today.

See you next week 👋

Disclaimer: The information provided in this newsletter and related resources is intended for informational and educational purposes only. It reflects both researched facts and my personal views. It does not constitute professional advice. Any actions taken based on the content of this newsletter are at the reader's discretion.