Making a positive impact with Human Centered AI (HCAI) in Aerospace

HCAI, or Human Centered AI, aims to augment human capabilities while maintaining control and trust. Explore its key aspects and applications.

In my AI learning journey I have come across HCAI, which stands for Human Centered AI.

It’s about AI helping humanity for a better future.

Does this sound like a utopia to you?

HCAI aims to augment and complement human capabilities rather than replace them.

It puts the human at the “center”: we keep control, and AI meets our needs in a transparent, respectful and trustworthy way.

Today I cover:

What is HCAI

Key aspects of HCAI

A first approach to HCAI governance

Some conceptual applications of HCAI in aerospace

Conclusions

Resources to learn more

Let’s dive in! 🤿

What is HCAI

As Stanford University professor Melissa Valentine says in her interview for McKinsey Talks Talent:

So generative AI; it’s just data. It’s just a language model. But it’s also all the social arrangements that have to happen around it for it to actually accomplish any of the things where we see the potential.

In fact, there is already an emerging discipline called Human Centered AI (HCAI), driven by prestigious institutions, research institutes and corporations, that focuses on the design and implementation of AI-based systems that prioritise:

human values

social needs and

ethical considerations.

Key aspects of HCAI

HCAI is focused on long-term sustainability.

The key aspects of HCAI to enhance human capabilities and well-being are:

User-Centric Design: Developing AI systems with a strong focus on the end-user's experience, needs, and feedback.

Ethical Considerations: Embedding ethical principles in the AI development process to address issues like fairness, privacy, transparency, accountability, and avoiding bias.

Empowerment: Designing AI to augment human abilities, support decision-making, and empower users, rather than replacing or diminishing human roles and capabilities.

Transparency and Explainability: Making AI systems transparent, with decisions that are understandable and explainable to users.

Safety and Reliability: Ensuring AI systems are safe, secure, and reliable, minimizing risks to individuals and society, and enabling users to have confidence in the technology.

Collaborative Development: Involving stakeholders, including potential users and different types of experts, in the development process to ensure the technology is aligned with human values and societal norms.

Regulatory Compliance: Adhering to legal and regulatory frameworks designed to protect individual rights and promote public good.

An approach to HCAI Governance

On the topic of HCAI governance, I have started reading the book "Human-Centered AI" by Ben Shneiderman, and I find it encouraging.

I'm eager to share the author's insights with you in the near future.

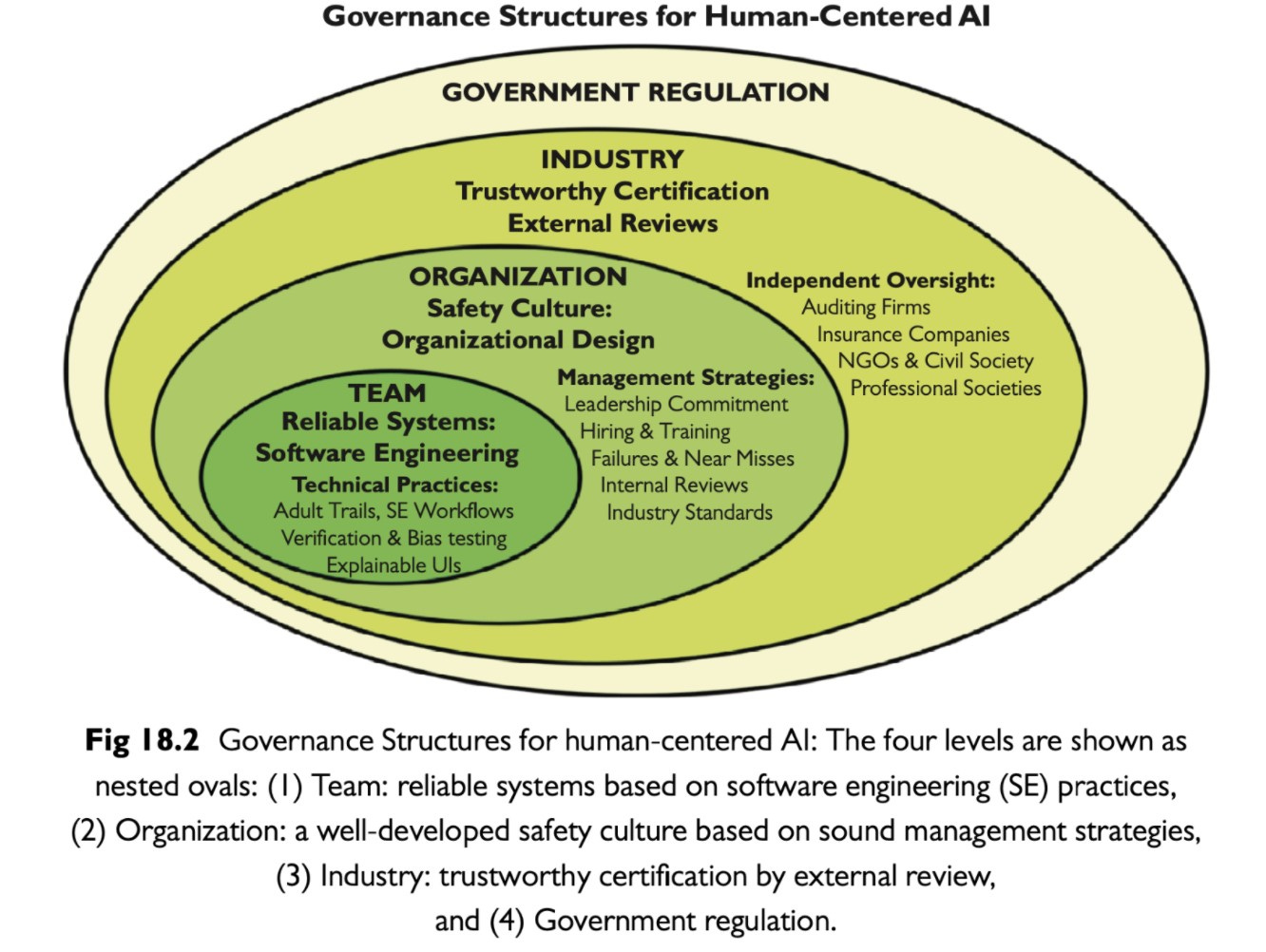

As a first approach to an HCAI governance framework, the book proposes four layers for building reliable human-centered systems:

The Team (ensuring reliable systems: software engineering)

The Organisation (fostering a safety culture: organizational design, management strategies)

The Industry (certifying trustworthiness: external reviews, independent oversight)

Government Regulation

The book presents 15 practices across the different layers to enhance AI's safety, reliability, and trust, promoting technologies that advance human values and mitigate risks.

The picture above is taken from Chapter 18 of the book.

Some conceptual applications of HCAI in Aerospace

Right, so bearing in mind that HCAI puts the human being at the center, I've compiled three examples of how AI could be implemented with a Human-Centered approach in the Aerospace industry:

Flight Training and Simulation: HCAI can personalize training programs for pilots and crew members by analysing their performance, learning pace, and areas needing improvement. This can help in creating more effective training modules that adapt to individual learning styles, enhancing the overall training experience.

Maintenance and Diagnostics: By incorporating human feedback into AI algorithms, aerospace companies can develop predictive maintenance tools that are more aligned with technicians' expertise and workflows. This not only improves the accuracy of diagnostics but also empowers maintenance staff by providing them with tools that complement their skills and experience.

Safety and Risk Analysis: AI systems can analyse vast amounts of data from flight operations, maintenance records, and environmental factors to identify potential safety risks before they become critical. By designing these systems to work collaboratively with safety analysts, HCAI can enhance the industry's ability to predict and mitigate risks.
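To make the maintenance example a little more concrete, here is a minimal Python sketch of a human-in-the-loop review. It is only an illustration under my own assumptions: the names (`Recommendation`, `MaintenanceReview`, `suggest_action`), the 0.7 threshold and the sample components are hypothetical and do not come from any real aerospace system or library. The idea is that the AI only suggests an action and explains why, the technician makes the final call, and every disagreement is logged so it can later be used as feedback.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    component: str
    risk_score: float      # 0.0 (healthy) to 1.0 (likely to fail); hypothetical scale
    reasons: list[str]     # plain-language explanation shown to the technician


@dataclass
class Decision:
    recommendation: Recommendation
    suggested_action: str   # what the AI proposed
    technician_action: str  # what the human actually decided
    overridden: bool        # True when the human disagreed with the AI
    note: str = ""


def suggest_action(rec: Recommendation, threshold: float = 0.7) -> str:
    """Return a suggestion only; nothing is scheduled without a human decision.

    The 0.7 threshold is an arbitrary value chosen for illustration.
    """
    return "inspect" if rec.risk_score >= threshold else "defer"


@dataclass
class MaintenanceReview:
    log: list[Decision] = field(default_factory=list)

    def record(self, rec: Recommendation, technician_action: str, note: str = "") -> Decision:
        """Store the technician's final decision next to the AI suggestion."""
        if technician_action not in ("inspect", "defer"):
            raise ValueError("technician_action must be 'inspect' or 'defer'")
        suggested = suggest_action(rec)
        decision = Decision(rec, suggested, technician_action,
                            technician_action != suggested, note)
        self.log.append(decision)
        return decision

    def override_rate(self) -> float:
        """Share of decisions where the technician overrode the AI suggestion."""
        if not self.log:
            return 0.0
        return sum(d.overridden for d in self.log) / len(self.log)


review = MaintenanceReview()
pump = Recommendation("hydraulic pump", 0.82, ["vibration above recent baseline"])
valve = Recommendation("cabin valve", 0.75, ["pressure drift"])
review.record(pump, "inspect")
review.record(valve, "defer", note="sensor replaced yesterday, reading unreliable")
print(review.override_rate())  # prints 0.5
```

A high override rate would be a signal to revisit the model together with the technicians, which is one simple way of keeping the human at the center.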

Conclusions

It’s evident that incorporating HCAI in aerospace requires a multidisciplinary approach, involving collaboration between AI, organizational, and industry experts.

This ensures that AI applications are developed and implemented in ways that truly enhance human capabilities and contribute positively to the aerospace industry.

The challenge, from my point of view, lies in creating all these social arrangements and establishing recognised governance frameworks.

Why am I so excited?

Because being part of creating something that will mark the next human era is a significant responsibility, and it is incredibly encouraging to see its great potential.

Want to learn more?

See below some interesting references:

This is all for today.

See you next week 👋

Disclaimer: The information provided in the newsletter and related resources is intended for informational and educational purposes only. It does not constitute professional advice, and any actions taken based on the content are at the reader's discretion.