Decoding Risk of AI Implementation in Aerospace

Let's walk through an example to understand how EASA objectives are levelled based on risk when implementing an AI-based system in aerospace.

In the document "EASA Concept Paper: First usable guidance for Level 1 & 2 machine learning applications", EASA establishes practical objectives to serve as a foundation when formal regulations come into effect.

Is your organisation ready to implement AI-based systems?

I strongly recommend understanding the four essential "building blocks" of AI trustworthiness, along with customising their objectives and applying the proportionality criteria.

In today's article, I introduce the key considerations EASA highlights when adopting a risk-based approach to implementing AI-based systems.

Let's explore through an example:

The Objectives and Means of Compliance

The Criteria of Proportionality

The AI level

The Level of Criticality

The Risk-based levelling of Objectives

Conclusions

Enjoy the read! 💪

The Objectives and Means of Compliance

As introduced in the article The Regulatory Landscape of AI in Aerospace, four building blocks are essential for establishing trustworthy criteria in the use of AI technology.

EASA has formulated specific objectives for each building block and provided anticipated Means of Compliance (MOC) when applicable to clarify the expectations behind these objectives.

The Criteria of Proportionality

Two main criteria can be utilized to anticipate the application of proportionality for the Means of Compliance in AI-based system applications:

The AI Level: Addressed by EASA in Block #1, focusing on AI trustworthiness analysis of the AI-based system.

The Level of Criticality of the AI System: Addressed by EASA in Block #2, emphasizing AI assurance of the AI-based system.

The AI level

In the newsletter “AI Use Cases Tailored for Part 145 Organisations”, I reviewed the AI Level: EASA identifies three levels of AI systems, each escalating as the authority of the end user is reduced.

Let’s understand the concept through an example:

Imagine having an AI-based system that generates a Maintenance Plan schedule (CAMO organisation) based on data, such as the Operator Maintenance Programme.

The model could be trained to evaluate the data and provide the CAMO with scheduled inspections at the most appropriate time.

In this case, the application of proportionality depends on whether the system only supports the planning engineer's analysis or decision-making (human augmentation, Level 1). The final decision remains complex, as the planning engineer must also consider other factors such as fleet availability, hangar space, resource availability, etc.

However, if the system were upgraded to plan inspections automatically, considering all of these aspects, it could even reach Level 3.

Therefore, the AI Level is the first of the two criteria needed for the Risk-based levelling of objectives, and EASA presents the levels using the colour code below as a key to interpret the application of proportionality.

The Level of Criticality

Regarding the criticality of the AI system, with the current state of knowledge, EASA anticipates limitations on the validity of the models depending on the Assurance Levels.

As the EASA guide explains: 'Depending on the safety criticality of the application and the aviation domain, an assurance level is allocated to the AI-based (sub)system (e.g., development assurance level (DAL) for initial and continuing airworthiness or air operations, or software assurance level (SWAL) for air traffic management/air navigation services (ATM/ANS)).'

For example, for initial or continuing airworthiness or air operations, failure conditions are classified from catastrophic to minor, depending on the effect on safety margins if the AI application fails, and the assurance level follows from that classification.

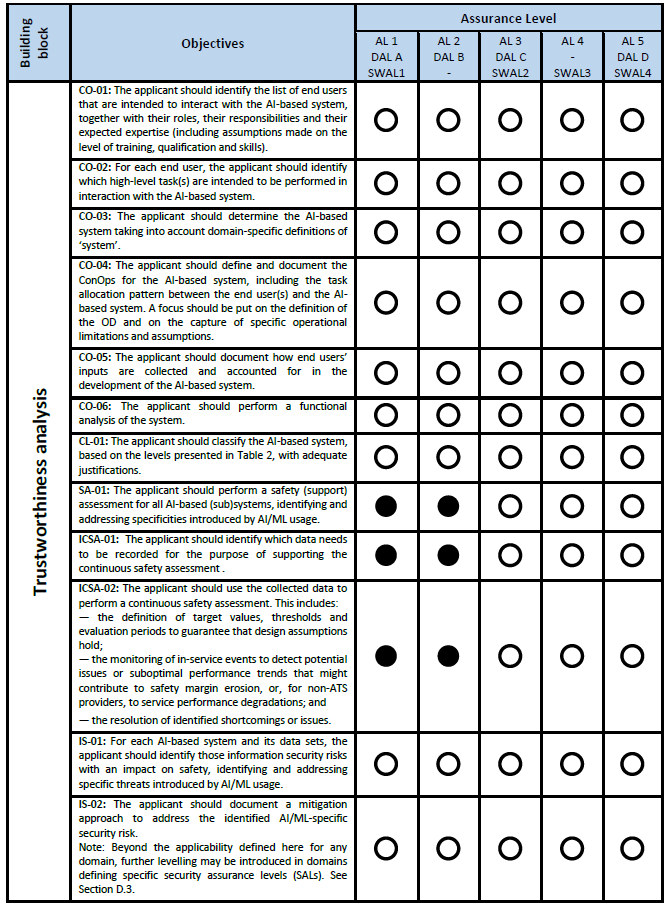

The table below shows the different levels for embedded systems based on the development assurance level (DAL), which goes from A to D.

This classification is based on the standards ED-79A/ARP4754A and ARP4761, which address the development of civil aircraft and systems and the safety assessment process, respectively.

Returning to the Maintenance Plan example, let's classify it as DAL A: if the AI-based system fails, inspections could be missed, which, over time, could have catastrophic consequences.
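To make the link between severity and assurance level concrete, here is a minimal sketch in Python. It assumes the conventional top-level pairing of failure-condition severity with DAL (catastrophic with A, hazardous with B, major with C, minor with D). The names `Severity`, `SEVERITY_TO_DAL` and `dal_for` are hypothetical, and the sketch deliberately ignores any refinements a real safety assessment would apply.

```python
from enum import Enum


class Severity(Enum):
    """Failure-condition severities, from most to least severe."""
    CATASTROPHIC = "catastrophic"
    HAZARDOUS = "hazardous"
    MAJOR = "major"
    MINOR = "minor"


# Assumption: simplified one-to-one pairing of severity and DAL.
# A real allocation comes out of the safety assessment process.
SEVERITY_TO_DAL = {
    Severity.CATASTROPHIC: "A",
    Severity.HAZARDOUS: "B",
    Severity.MAJOR: "C",
    Severity.MINOR: "D",
}


def dal_for(severity: Severity) -> str:
    """Return the DAL letter conventionally paired with a severity."""
    return SEVERITY_TO_DAL[severity]


# The Maintenance Plan example is treated as potentially catastrophic.
maintenance_plan_dal = dal_for(Severity.CATASTROPHIC)
print(maintenance_plan_dal)
```

Under this assumption, the Maintenance Plan example lands on DAL A, which matches the classification used in the rest of this article.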

As seen, the level of criticality is the second criterion needed for the Risk-based levelling of objectives.

EASA uses the legend below as a key to interpret the application of proportionality.

The Risk-based levelling of Objectives

Now that you understand the necessary criteria for interpreting the Risk-based levelling of objectives, let’s revisit the previous example of a Maintenance Plan using an AI-based system.

In this particular case, the criteria would be:

The AI level: Level 1 - It supports the planning engineer in the analysis or decision-making. The planning engineer has full authority and will consider other factors such as fleet availability, hangar space, resource availability, etc.

The Criticality: DAL A - If the AI-based system fails, inspections could be missed, which over time could have catastrophic consequences.

As mentioned above, each EASA Building Block has a set of objectives. Let’s take as an example the objectives for Building Block #1, Trustworthiness Analysis, from the EASA guidance:

As illustrated, the first column (DAL A) would be applicable, implying that, according to the legends presented throughout this article:

Objectives SA-01, ICSA-01, ICSA-02 will need to be independently satisfied, prompting an independent or third-party audit.

The remaining objectives only require evidence of satisfaction.
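The reading above can be sketched as a small lookup in Python. This is only an illustration: it encodes nothing beyond what this article states for the worked example (AI Level 1, DAL A), and it refuses any other combination rather than guess at the EASA table. The names `Criteria`, `treatment` and the placeholder objective ID `HYPOTHETICAL-99` are my own and do not come from EASA.

```python
from dataclasses import dataclass

# Objectives this article reads as needing independent satisfaction
# for the worked example (AI Level 1, DAL A). Nothing else is encoded.
INDEPENDENT_AT_LEVEL1_DAL_A = frozenset({"SA-01", "ICSA-01", "ICSA-02"})


@dataclass(frozen=True)
class Criteria:
    """The two proportionality criteria discussed in this article."""
    ai_level: int  # 1, 2 or 3
    dal: str       # "A" to "D"


def treatment(objective_id: str, criteria: Criteria) -> str:
    """Return how an objective is to be satisfied for the worked example."""
    if criteria != Criteria(ai_level=1, dal="A"):
        # Other combinations must be read from the EASA table itself.
        raise NotImplementedError("only the Level 1 / DAL A example is encoded")
    if objective_id in INDEPENDENT_AT_LEVEL1_DAL_A:
        return "independent satisfaction"
    return "evidence of satisfaction"


example = Criteria(ai_level=1, dal="A")
print(treatment("SA-01", example))
# "HYPOTHETICAL-99" is a made-up ID standing in for any remaining objective.
print(treatment("HYPOTHETICAL-99", example))
```

Keeping the two criteria in one `Criteria` value mirrors the way the levelling is read: first fix the AI level and the criticality, then look up each objective.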

Conclusions

While not yet formal regulation, this guidance significantly directs the path towards compliance and trustworthiness in AI-based systems.

It offers insight into EASA's potential expectations for approval.

For those contemplating the integration of an AI-based system into organizational processes, especially Safety and Compliance professionals, I strongly recommend considering EASA's guidelines in your AI journey.

It's crucial for the industry to comprehend these requirements and collaborate with AI providers or have in-house AI specialists to ensure future compliance needs are met.

Disclaimer: The information provided in the newsletter and related resources is intended for informational and educational purposes only. It does not constitute professional advice, and any actions taken based on the content are at the reader's discretion.